Feb 3, 2026

ActionStreamer

Smart Glasses in 2026

In 2026, smart glasses are settling into a new phase. They are no longer just speculative consumer gadgets or niche enterprise tools. Across consumer and industrial markets, the category is maturing around a shared idea: lightweight, head-mounted devices that can see, hear, and increasingly stream what the wearer experiences.

What separates a novelty from a real platform isn’t the display alone. It’s how well these glasses move media off the device and into live workflows.

The Smart Glasses Landscape Is Expanding

On the consumer and prosumer side, companies such as Meta, Brilliant Labs, Xreal, and Snap (with its Spectacles) have pushed smart glasses toward everyday wear. These products emphasize comfort, camera-first experiences, and lightweight augmented reality, and they often rely on a paired phone for compute and connectivity.

At the same time, Amazon has explored multiple paths. Echo Frames introduced audio-first smart glasses, while the Amelia smart glasses effort signals continued experimentation around AI-driven, hands-free interfaces. Together, these approaches suggest smart glasses don’t need to look the same to succeed, but they do need to integrate seamlessly into daily workflows.

On the industrial and enterprise side, HTC and Vuzix have focused on ruggedized designs, compatibility with enterprise software, and long-duration use. Their glasses are increasingly deployed in environments where live video supports maintenance, inspections, training, and operational oversight.

The Hardware Reality: Chips Matter

Under the hood, smart glasses are defined as much by silicon as by optics. Most of these devices run on highly constrained chipsets designed to balance power consumption, heat, and performance. This is where platforms like the Qualcomm AR1 developer kit come into play.

The AR1 platform reflects a broader shift toward chips purpose-built for smart glasses. These systems-on-chip prioritize camera ingest, AI acceleration, and wireless connectivity while keeping power draw low enough for all-day wear. The tradeoff is clear: there is very little excess compute available for heavy video processing or inefficient streaming stacks.

As a result, smart glasses can capture high-quality video, but getting that video off the device live, reliably, and with low latency remains one of the hardest problems to solve.

Streaming Is the Bottleneck

Smart glasses live at the edge of the network, both literally and technically. They move between Wi-Fi, private cellular, and public 5G, often in environments where bandwidth fluctuates or drops entirely. At the same time, they must preserve battery life and avoid thermal throttling.

This makes traditional, consumer-grade streaming approaches a poor fit. Encoding, buffering, and retry logic designed for phones or laptops quickly overwhelm head-mounted devices. When that happens, latency spikes, frames drop, or streams fail altogether.

For enterprise deployments especially, that kind of failure is unacceptable. Live video is often the primary reason the glasses are worn in the first place.
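One common way to keep buffering from overwhelming a constrained device is to cap the send queue and discard the oldest frames instead of letting latency grow without bound. The Python sketch below illustrates that idea. The `Frame` and `LatestFramesBuffer` names and the drop-oldest policy are hypothetical assumptions for illustration, not ActionStreamer's implementation or any device SDK.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    seq: int        # capture sequence number
    payload: bytes  # encoded frame data


class LatestFramesBuffer:
    """Hypothetical bounded send buffer that evicts the oldest frame when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        # deque(maxlen=...) discards the oldest item automatically on append
        self._frames: deque = deque(maxlen=capacity)
        self.dropped = 0  # count of frames evicted to keep latency bounded

    def push(self, frame: Frame) -> None:
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1  # the append below will evict the oldest frame
        self._frames.append(frame)

    def pop(self) -> Optional[Frame]:
        # Return the oldest frame still queued, or None if the buffer is empty
        return self._frames.popleft() if self._frames else None

    def __len__(self) -> int:
        return len(self._frames)


# Usage: a stalled uplink lets five frames pile up in a three-slot buffer
buf = LatestFramesBuffer(capacity=3)
for i in range(5):
    buf.push(Frame(seq=i, payload=b""))
print(buf.dropped, len(buf), buf.pop().seq)
```

Memory and latency stay bounded no matter how long the network stalls. In a real H.264 or H.265 pipeline, dropping arbitrary frames can break decoding of the frames that reference them, so production stacks typically drop at keyframe boundaries or lower the encoder bitrate instead.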

Why the Media Uplink Is the Differentiator

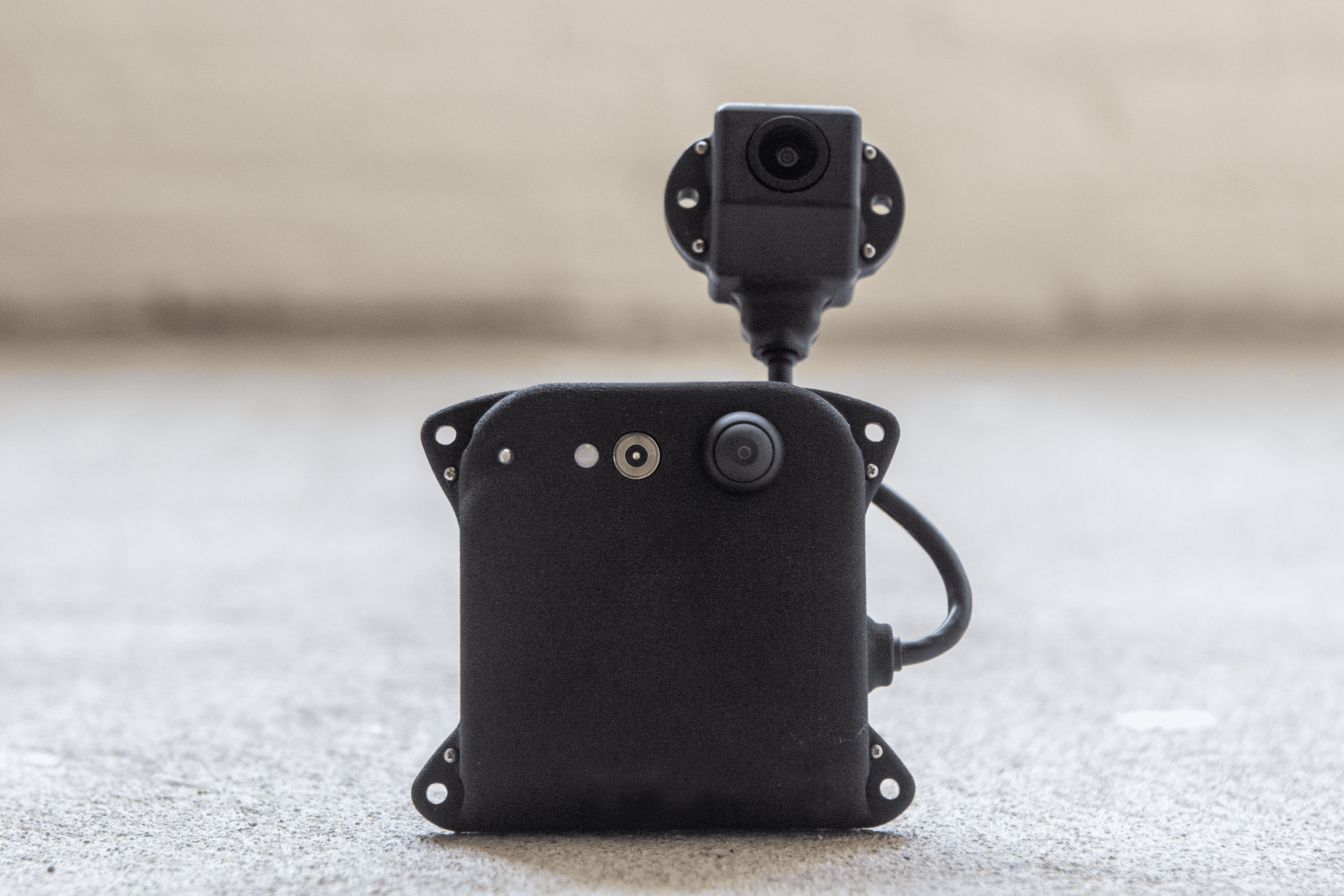

The media uplink is the layer responsible for moving video, audio, and telemetry off the glasses and into the rest of the system. It determines whether smart glasses behave like fragile demos or dependable tools.

A purpose-built uplink minimizes on-device overhead, adapts in real time to changing network conditions, and preserves continuity even when connectivity is imperfect. It also enables streams to be routed to multiple viewers, recorded for later review, or sent into AI pipelines without manual intervention.

As smart glasses scale into real operations, this layer becomes more important than the display itself.
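Adapting in real time to changing network conditions is often handled by a feedback loop on the sender. The sketch below uses additive-increase/multiplicative-decrease (AIMD), the principle behind classic TCP congestion control, to choose an encoder bitrate from receiver reports of packet loss and round-trip time. The class name, thresholds, and default values are hypothetical assumptions for illustration, not figures from ActionStreamer or any smart-glasses SDK.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BitrateController:
    """Hypothetical AIMD bitrate controller for a live video uplink."""

    min_kbps: int = 300            # floor that keeps the stream alive on poor links
    max_kbps: int = 4000           # ceiling set by the encoder and power budget
    step_kbps: int = 200           # additive increase per healthy report
    backoff: float = 0.5           # multiplicative decrease on congestion
    loss_threshold: float = 0.02   # packet-loss fraction treated as congestion
    current_kbps: int = 1500

    def update(self, loss_fraction: float, rtt_ms: float,
               rtt_limit_ms: float = 250.0) -> int:
        """Return the new target bitrate given the latest network report."""
        congested = loss_fraction > self.loss_threshold or rtt_ms > rtt_limit_ms
        if congested:
            target = int(self.current_kbps * self.backoff)  # back off quickly
        else:
            target = self.current_kbps + self.step_kbps     # probe upward slowly
        # Clamp to the configured range before applying to the encoder
        self.current_kbps = max(self.min_kbps, min(self.max_kbps, target))
        return self.current_kbps


# Usage: one healthy report, then a burst of loss
ctrl = BitrateController()
print(ctrl.update(loss_fraction=0.0, rtt_ms=80))
print(ctrl.update(loss_fraction=0.05, rtt_ms=80))
```

AIMD backs off sharply when the link degrades and climbs back gradually when it recovers, which favors stream continuity over peak quality. A production uplink would likely smooth the loss and RTT measurements before reacting, rather than responding to each individual report.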

Where ActionStreamer Fits

This is the layer ActionStreamer specializes in.

ActionStreamer focuses on the media uplink and live-streaming infrastructure required to make smart glasses work in the real world. The platform is designed to run efficiently on low-powered devices, handling live video, audio, and telemetry without forcing heavy processing onto the glasses themselves. It is built for unstable networks, real-time routing, and integration into operational systems and AI workflows.

Rather than competing with smart-glasses manufacturers, ActionStreamer complements them by solving the hardest part of deployment: reliable, enterprise-grade live media from the edge.

Looking Ahead

As the category continues to mature, it’s likely we’ll see more attention from major technology companies. Apple and Google have already laid groundwork across wearables, silicon, and spatial computing, and smart glasses are a natural extension of those efforts.

What will determine success is not just who builds the most elegant hardware, but who can support dependable live media on devices that are inherently constrained. In that future, smart glasses won’t be judged by what they display, but by how effectively they connect people, systems, and intelligence in real time.
